AI-produced music that imitates the voices of popular artists has gone viral on social media, fooling some listeners into believing that a new song, such as one attributed to the Weeknd and Drake, is authentic. Some experts, however, believe the practice is illegal and constitutes copyright infringement.

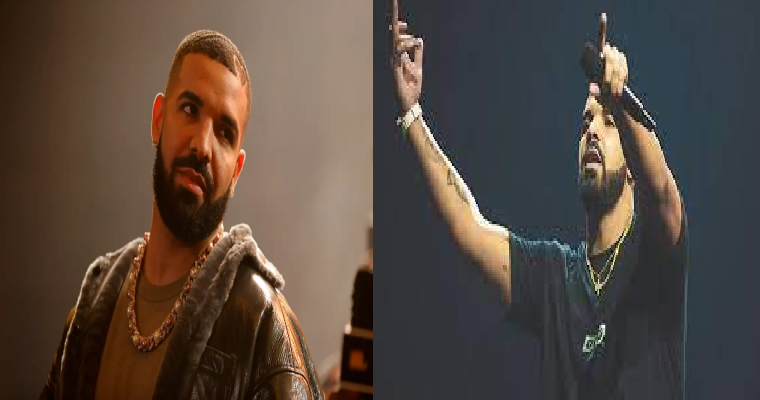

Drake performs at the 2017 Coachella Music Festival.

AI-generated music has become the latest social media trend. It involves either using AI to make a famous artist appear to cover a popular song, or creating a new song that sounds exactly as if the artist performed it.

A TikTok video featuring AI-generated versions of Drake, Kendrick Lamar and Ye (formerly known as Kanye West) singing “Fukashigi no Karte,” a theme song from a popular anime series, went viral, drawing over two million likes and comments such as: “This is the good part of AI they don’t talk about.”

Another wildly popular video, made by the anonymous TikTok creator Ghostwriter, featured an AI-created original song by the Weeknd and Drake called “Heart on My Sleeve.” The track racked up millions of streams before platforms like Spotify, Apple Music, YouTube and TikTok removed it.

According to a report by the Financial Times, Universal Music Group, a major music company that represents big artists like Drake, the Weeknd and Ariana Grande, sent a letter to streaming services asking them to block AI platforms from using their services to train on the lyrics and melodies of copyrighted songs.

Some artists have capitalized on the opportunity. Singer Grimes, for example, announced that anyone could use AI to create songs using her voice “without penalty,” as long as she receives a 50% split of the royalties, Rolling Stone reports.